Retrieve Profiles & Websites DAG

The retrieve_profiles_websites DAG retrieves profiles and their associated websites from the Webserver API and stores them in the Crawlserver MongoDB database. It updates records that already exist and inserts new ones. For newly inserted profiles and websites, it also creates jobs based on the available tasks.

Parameters

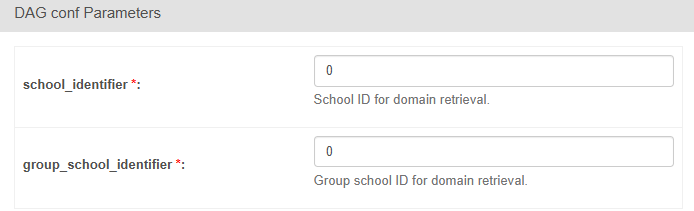

The DAG accepts two parameters, exactly one of which must be provided:

- school_identifier (int): School identifier for a profile.

- group_school_identifier (int): Group school identifier for a profile.

Tasks

The DAG consists of 4 PythonOperator tasks:

1. validate_params

- Validates the input parameters using validate_input_params_profile. This task ensures that either school_identifier or group_school_identifier is provided, but not both, before any processing begins.
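Below is a minimal sketch of the check, for illustration only. It is a hypothetical reimplementation of validate_input_params_profile, not its actual code. It assumes that an omitted parameter arrives as None and that a violation is reported by raising ValueError.

```python
from typing import Optional


def validate_input_params_profile(
    school_identifier: Optional[int] = None,
    group_school_identifier: Optional[int] = None,
) -> None:
    """Hypothetical sketch: require exactly one of the two identifiers."""
    provided = [
        name
        for name, value in (
            ("school_identifier", school_identifier),
            ("group_school_identifier", group_school_identifier),
        )
        if value is not None  # 0 is a valid identifier, so compare against None
    ]
    if len(provided) != 1:
        # An exception raised inside a PythonOperator callable fails the task;
        # with the default trigger rule, downstream tasks then do not run.
        raise ValueError(
            "Provide exactly one of school_identifier or group_school_identifier, "
            f"got: {provided or 'none'}"
        )
```

For example, `validate_input_params_profile(school_identifier=12)` returns quietly. Passing both identifiers, or neither, raises.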

2. get_auth_token

- Retrieves an authentication token from the Webserver. This token is stored in XCom so that it can be used by subsequent tasks to authenticate API calls.
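The token handoff between tasks can be sketched as follows. The XCom key auth_token, the fetch_token callable and the Bearer header scheme are all assumptions. Here, ti stands for the Airflow TaskInstance that a PythonOperator callable receives from its context. It is reduced to the two XCom methods the sketch uses, so the example runs without Airflow.

```python
from typing import Any, Callable, Dict, Protocol


class TaskInstanceLike(Protocol):
    """The subset of Airflow's TaskInstance used here (xcom_push / xcom_pull)."""

    def xcom_push(self, key: str, value: Any) -> None: ...

    def xcom_pull(self, task_ids: str, key: str) -> Any: ...


AUTH_TOKEN_KEY = "auth_token"  # hypothetical XCom key


def get_auth_token(ti: TaskInstanceLike, fetch_token: Callable[[], str]) -> None:
    """Fetch a token from the Webserver and share it with later tasks via XCom."""
    token = fetch_token()  # hypothetical callable that performs the login request
    ti.xcom_push(key=AUTH_TOKEN_KEY, value=token)


def auth_headers(ti: TaskInstanceLike) -> Dict[str, str]:
    """In a downstream task, build request headers from the token stored in XCom."""
    token = ti.xcom_pull(task_ids="get_auth_token", key=AUTH_TOKEN_KEY)
    # The Bearer scheme is an assumption about the Webserver API.
    return {"Authorization": f"Bearer {token}"}
```

Airflow persists XCom values between tasks, so a token stored this way stays available until it is cleared. That is one reason for the XCom cleanup step at the end of the DAG.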

3. process_profiles_and_jobs

- Calls the API to fetch profile data.

- Processes each profile by either updating an existing record or inserting a new one into the childs_profile collection.

- For newly inserted profiles, it creates associated jobs (e.g., for profile crawling) based on the available tasks from the core_tasks collection.
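The update-or-insert branch and the job creation for new profiles can be sketched in plain Python. A dictionary keyed by profile id stands in for the childs_profile collection. The field names (id, _id) and the job document shape are hypothetical, not taken from the actual DAG.

```python
from typing import Any, Dict, List

Record = Dict[str, Any]


def upsert_profiles(
    collection: Dict[Any, Record],
    profiles: List[Record],
    tasks: List[Record],
) -> List[Record]:
    """Update known profiles, insert new ones, and return jobs for the new ones.

    `collection` is an in-memory stand-in for the childs_profile collection,
    keyed by the API's profile id (the "id" field name is an assumption).
    """
    jobs: List[Record] = []
    for profile in profiles:
        profile_id = profile["id"]
        if profile_id in collection:
            # Existing record: merge in the fields returned by the API, no new jobs.
            collection[profile_id].update(profile)
            continue
        # New record: insert a copy, then create one job per available core task.
        collection[profile_id] = dict(profile)
        for task in tasks:
            jobs.append(
                {"task_id": task["_id"], "profile_id": profile_id, "status": "pending"}
            )
    return jobs
```

Against MongoDB, the same branch could map onto pymongo's find_one followed by update_one or insert_one. A single update_one(..., upsert=True) is another option. Its UpdateResult.upserted_id is None when an existing document was matched, which tells the caller whether jobs are needed.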

4. process_pages_and_jobs

- Retrieves website (page) data from the API, using the data that process_profiles_and_jobs pushes to XCom.

- Inserts or updates website records in the website collection.

- For new websites, creates jobs (e.g., for publication crawling) based on the available tasks.
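For websites the pattern is similar, but jobs are limited to the tasks relevant to website crawling. The sketch below rests on several assumptions:

- Websites are identified by their url.
- Each task carries a hypothetical type field.
- "publication_crawl" is the type of interest.
- A set of known URLs stands in for the website collection.

```python
from typing import Any, Dict, List, Set, Tuple

Record = Dict[str, Any]


def plan_website_jobs(
    known_urls: Set[str],
    pages: List[Record],
    tasks: List[Record],
    task_type: str = "publication_crawl",  # hypothetical task type
) -> Tuple[List[Record], List[Record]]:
    """Return (new websites, jobs), creating jobs only for websites not seen before.

    known_urls stands in for the URLs already in the website collection and is
    updated in place as new websites are found.
    """
    relevant_tasks = [t for t in tasks if t.get("type") == task_type]
    new_sites: List[Record] = []
    jobs: List[Record] = []
    for page in pages:
        url = page["url"]
        if url in known_urls:
            continue  # existing website: it would be updated in place, no new jobs
        known_urls.add(url)  # also skips duplicates within the same batch
        new_sites.append(page)
        for task in relevant_tasks:
            jobs.append({"task_id": task["_id"], "website_url": url, "status": "pending"})
    return new_sites, jobs
```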

Cleaning XCom

- Once the DAG run completes successfully, all XCom entries created during that run, including the authentication token, are deleted so that intermediate data does not linger in Airflow's XCom storage.